Kaiko Project 5 Gameplay

May 2025

During my full-time employment at Kaiko, I was responsible for several gameplay features, ranging from player traversal to enemy AI. All work was done for Project 5, an unreleased title based on a well-known THQ Nordic IP. The game was developed using an Entity Component System (ECS) architecture, where entities were defined by their components, and logic was implemented through dedicated systems operating on those components. Each component stored data and configuration specific to its associated entity, which was managed with the help of proprietary tools and converters.
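To make the ECS terminology concrete, here is a minimal, hypothetical C++ sketch. It is not Kaiko's proprietary framework, and all names are illustrative. Entities are plain IDs, each component type lives in its own storage keyed by entity, and a system is a function that iterates over the entities owning the components it needs.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>

// Hypothetical minimal ECS: an entity is just an ID, each component type lives
// in its own storage keyed by entity, and a system is a function over components.
using EntityId = std::uint32_t;

struct Position { float x = 0.0f, y = 0.0f, z = 0.0f; };
struct Velocity { float x = 0.0f, y = 0.0f, z = 0.0f; };

template <typename T>
class ComponentStorage {
public:
    T& add(EntityId id, const T& value = T{}) { return components_[id] = value; }

    T* get(EntityId id) {
        auto it = components_.find(id);
        return it == components_.end() ? nullptr : &it->second;
    }

    std::unordered_map<EntityId, T>& all() { return components_; }

private:
    std::unordered_map<EntityId, T> components_;
};

// A "system": integrates every entity that owns both a Position and a Velocity.
void movementSystem(ComponentStorage<Position>& positions,
                    ComponentStorage<Velocity>& velocities, float dt) {
    assert(dt >= 0.0f);  // the time step must not be negative
    for (auto& [id, velocity] : velocities.all()) {
        if (Position* p = positions.get(id)) {
            p->x += velocity.x * dt;
            p->y += velocity.y * dt;
            p->z += velocity.z * dt;
        }
    }
}
```

A production ECS usually stores components in dense arrays or archetype tables for cache-friendly iteration; the hash map only keeps the sketch short.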

Throughout the process, I collaborated closely with a number of other departments, including Game Design, Art, Animation, VFX, Rendering, and Level Design. Many features were the result of tight cross-disciplinary coordination, and I was fortunate to work alongside incredibly skilled and talented people, many of whom I will mention later in this post.

Contents of this post:

- Wall Movement System

- Steering System

- Interactable System

- Carriable System

- Quick Time Event System

- Boss Battle

Wall Movement System

This system handles both vertical and horizontal wall runs. One experimental variation allowed the player to manually switch wall run direction upon reaching designated wall run extenders, while the default behavior extended the wall run automatically in the same direction. During a wall run, if a designated wall-run target - such as a wall run extender or a horizontal rail - is detected, the movement curve dynamically adjusts to guide the player toward a predefined position relative to that target. From there, a transition animation can begin. If an extender is detected below a falling player, the system either pulls the player toward the extender to initiate a “jump-over” animation or pulls away if a valid landing cannot be predicted.
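The extender check for a falling player can be illustrated as a simple ballistic prediction. This is a hypothetical sketch, not the project's code. It assumes constant gravity and no drag, solves for the time at which the player reaches the extender's height, and compares the predicted horizontal position against an assumed `captureRadius`. All names are made up for the example.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical sketch: decide whether a falling player is pulled toward an
// extender below them or pushed away. Assumes constant gravity, no drag, and a
// "capture radius" (an assumption) within which a small correction still lands.
struct Vec2 { float x, z; };  // horizontal plane

enum class ExtenderResponse { PullToward, PushAway };

ExtenderResponse evaluateExtenderBelow(Vec2 playerPos, float heightAboveExtender,
                                       Vec2 horizontalVelocity, float verticalVelocity,
                                       Vec2 extenderPos, float gravity, float captureRadius) {
    assert(gravity > 0.0f);
    if (heightAboveExtender < 0.0f) return ExtenderResponse::PushAway;  // already below it
    // Positive root of h + vy*t - 0.5*g*t^2 = 0: time until the extender's height is reached.
    const float discriminant = verticalVelocity * verticalVelocity + 2.0f * gravity * heightAboveExtender;
    const float t = (verticalVelocity + std::sqrt(discriminant)) / gravity;
    // Predicted horizontal offset from the extender at that moment.
    const float dx = playerPos.x + horizontalVelocity.x * t - extenderPos.x;
    const float dz = playerPos.z + horizontalVelocity.z * t - extenderPos.z;
    const bool landingPredicted = std::sqrt(dx * dx + dz * dz) <= captureRadius;
    return landingPredicted ? ExtenderResponse::PullToward : ExtenderResponse::PushAway;
}
```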

There are multiple transitions into a wall scrape state, for example after a vertical wall run with no valid target, or when a corner jump fails during a horizontal wall run. The horizontal rail traversal system, also shown in the video, is another feature I contributed to after taking it over from a former developer on the team.

Vertical (left) and horizontal (right) wall runs with auto-extenders.

Mixed wall runs with manual extenders.

Manual extenders include an idle timeout, after which the character automatically drops. Wall-running is only permitted in directions marked with a special wall-runnable material tag, which also indicates the type of wall run (vertical or horizontal).

While the player is airborne, nearby walls are detected using raycasts and evaluated against several conditions - such as the presence of the material tag, wall orientation, and whether the wall is part of a corner. This detection system provides a list of all valid wall run directions, but the final choice still depends on the player’s input.
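The final choice among the detected directions could look like the following hypothetical sketch. It assumes each valid option is reduced to a horizontal direction (into the wall for a vertical run, along the wall for a horizontal run) and picks the option best aligned with the stick input. The `minAlignment` default of 0.5 (about 60 degrees) is an assumption.

```cpp
#include <cassert>
#include <optional>
#include <vector>

enum class WallRunKind { Vertical, Horizontal };

// Hypothetical representation of one valid wall-run option.
struct WallRunCandidate {
    WallRunKind kind;
    float dirX, dirZ;  // normalized horizontal direction the input must point toward
};

// Returns the candidate best aligned with the (normalized) stick input, or
// nothing if no candidate reaches the minimum alignment.
std::optional<WallRunCandidate> chooseWallRun(const std::vector<WallRunCandidate>& candidates,
                                              float inputX, float inputZ,
                                              float minAlignment = 0.5f) {
    std::optional<WallRunCandidate> best;
    float bestAlignment = minAlignment;
    for (const WallRunCandidate& candidate : candidates) {
        const float alignment = candidate.dirX * inputX + candidate.dirZ * inputZ;  // cosine of the angle
        if (alignment >= bestAlignment) {
            bestAlignment = alignment;
            best = candidate;
        }
    }
    return best;
}
```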

Code example of how the nearby walls are analyzed for a potential wall run.

```cpp
simulation::WallMovementUtility::WallCollisionResult getWallCollisionResult(
    const keen::Vector3& position,
    const keen::Angle& playerDirection,
    const physics::PhysicsWorld& physicsWorld,
    bool processWallRun,
    simulation::WallMovementUtility::WallTestMode testMode)
{
    using namespace simulation;

    const WallMovementUtility::WallTestParameters& wallTestParameters = WallMovementUtility::getWallTextParameters(testMode);

    WallMovementUtility::WallCollisionResult wallCollisionResult;
    keen::fillMemoryWithZero(&wallCollisionResult, sizeof(wallCollisionResult));

    keen::Vector3 rayStartPosition(position);
    keen::Vector3 endPositionFrontOfCharacter(rayStartPosition);
    keen::Vector3 directionVector;
    directionVector.set2DDirection(playerDirection);
    endPositionFrontOfCharacter.addScaled(directionVector, WallMovementUtility::getFrontWallCastLength());

    // Raise the front ray to roughly hand height.
    keen::Vector3 rayStartHandsPosition(rayStartPosition);
    keen::Vector3 rayEndHandsPositionFrontOfCharacter(endPositionFrontOfCharacter);
    const float heightFactor = 1.4f;
    rayStartHandsPosition.y += heightFactor * HorizontalRailSystem::getRailUserHeightOffset();
    rayEndHandsPositionFrontOfCharacter.y += heightFactor * HorizontalRailSystem::getRailUserHeightOffset();

    physics::CastResult upperFrontHitResult;
    const bool hasUpperFrontRayHit = physicsWorld.castRay(&upperFrontHitResult, rayStartHandsPosition, rayEndHandsPositionFrontOfCharacter, physics::CollisionCaster_EntityMovement);

    // Edge/gap classification combines the upper front ray with the close diagonal (corner) test.
    const bool hasCloseFrontDiagonalHits = wallTestParameters.skipCornerTest || hasCloseDiagonalHits(position, playerDirection, physicsWorld);
    const bool isAtEdge = !hasCloseFrontDiagonalHits && hasUpperFrontRayHit;
    const bool isAtGap = hasCloseFrontDiagonalHits && !hasUpperFrontRayHit;

    bool hasBothValidRayHitsForVertical = false;
    bool hasBothValidRayHitsForHorizontal = false;
    bool isValidForWallScrape = false;
    wallCollisionResult.nearCastWallHitNormal.clear();

    if (processWallRun && !isAtEdge)
    {
        hasBothValidRayHitsForVertical = hasUpperFrontRayHit && isVerticallyRunnable(upperFrontHitResult, physicsWorld);

        // Test the horizontal axis if no vertical run is possible (or if both axes are requested).
        if (wallTestParameters.alwaysTestBothAxes || !hasBothValidRayHitsForVertical)
        {
            if (hasUpperFrontRayHit
                && (wallTestParameters.skipNearHorizontalWallCheck || isHorizontallyRunnable(upperFrontHitResult, physicsWorld))
                && (isFarCastHorizontallyRunnable(&wallCollisionResult.hasAvailableDirectionRight, true, upperFrontHitResult, rayStartHandsPosition, rayEndHandsPositionFrontOfCharacter, physicsWorld)
                    || isFarCastHorizontallyRunnable(&wallCollisionResult.hasAvailableDirectionLeft, false, upperFrontHitResult, rayStartHandsPosition, rayEndHandsPositionFrontOfCharacter, physicsWorld)))
            {
                // check the left side still, in case we have a valid wall run from both sides
                if (wallCollisionResult.hasAvailableDirectionRight)
                {
                    isFarCastHorizontallyRunnable(&wallCollisionResult.hasAvailableDirectionLeft, false, upperFrontHitResult, rayStartHandsPosition, rayEndHandsPositionFrontOfCharacter, physicsWorld);
                }
                wallCollisionResult.nearCastWallHitNormal = upperFrontHitResult.hitNormal;
                hasBothValidRayHitsForHorizontal = true;
            }
            else if (!wallTestParameters.skipSideWallTests)
            {
                // Front wall not usable for a horizontal run: cast diagonally to the left and right of the player's direction.
                keen::Vector3 endPositionLeftOfCharacter(rayStartPosition);
                keen::Vector3 endPositionRightOfCharacter(rayStartPosition);
                directionVector.clear();
                directionVector.set2DDirection(keen::normalizeAngle(playerDirection - KEEN_ANGLE_QUARTER * 0.5f));
                endPositionLeftOfCharacter.addScaled(directionVector, WallMovementUtility::getSideWallCastLength());
                directionVector.set2DDirection(keen::normalizeAngle(playerDirection + KEEN_ANGLE_QUARTER * 0.5f));
                endPositionRightOfCharacter.addScaled(directionVector, WallMovementUtility::getSideWallCastLength());

                // only cast the second ray if the first one was successful AND hit the valid wall running material
                physics::CastResult nearSideCastResult;
                bool hasFirstSideRayHit = physicsWorld.castRay(&nearSideCastResult, rayStartPosition, endPositionLeftOfCharacter, physics::CollisionCaster_EntityMovement);
                if (hasFirstSideRayHit && isHorizontallyRunnable(nearSideCastResult, physicsWorld))
                {
                    wallCollisionResult.nearCastWallHitNormal = nearSideCastResult.hitNormal;
                    // hit the wall from the left side; so cast the second ray to the right of the character:
                    hasBothValidRayHitsForHorizontal = isFarCastHorizontallyRunnable(&wallCollisionResult.hasAvailableDirectionRight, true, nearSideCastResult, rayStartPosition, endPositionLeftOfCharacter, physicsWorld);
                }
                else
                {
                    hasFirstSideRayHit = physicsWorld.castRay(&nearSideCastResult, rayStartPosition, endPositionRightOfCharacter, physics::CollisionCaster_EntityMovement);
                    if (hasFirstSideRayHit && isHorizontallyRunnable(nearSideCastResult, physicsWorld))
                    {
                        wallCollisionResult.nearCastWallHitNormal = nearSideCastResult.hitNormal;
                        // hit the wall from the right side; so cast the second ray to the left of the character:
                        hasBothValidRayHitsForHorizontal = isFarCastHorizontallyRunnable(&wallCollisionResult.hasAvailableDirectionLeft, false, nearSideCastResult, rayStartPosition, endPositionRightOfCharacter, physicsWorld);
                    }
                }
            }
        }
    }

    // No wall run found: check whether the front wall qualifies for a wall scrape.
    if (hasUpperFrontRayHit && !(hasBothValidRayHitsForHorizontal || hasBothValidRayHitsForVertical) && !isAtEdge)
    {
        const keen::Vector3 wallNormalVector = upperFrontHitResult.hitNormal;
        keen::Vector3 planarVector;
        planarVector.set2DDirection(wallNormalVector.get2DDirection());
        const float cosAngle = wallNormalVector.dotProduct(planarVector);
        const bool isAngleScrapeable = (cosAngle >= (float)cos(KEEN_ANGLE_FROM_DEG(PlayerControllerSystem::s_wallScrapeThreshold))) && (cosAngle <= 1.0f); // 90 > angle > 70
        if (isAngleScrapeable)
        {
            isValidForWallScrape = hasUpperFrontRayHit && isScrapeable(upperFrontHitResult, physicsWorld);
        }
    }

    wallCollisionResult.frontHitResult = upperFrontHitResult;
    wallCollisionResult.hasFrontHit = hasUpperFrontRayHit;
    wallCollisionResult.isAtWallEdge = isAtEdge;
    wallCollisionResult.isAtWallGap = isAtGap;
    wallCollisionResult.isValidForHorizontalWallRun = hasBothValidRayHitsForHorizontal;
    wallCollisionResult.isValidForVerticalWallRun = hasBothValidRayHitsForVertical;
    wallCollisionResult.isValidForWallScrape = isValidForWallScrape;

    return wallCollisionResult;
}
```

Steering System

The steering system was implemented by combining the notion of context maps with the steering behaviors by Craig W. Reynolds.

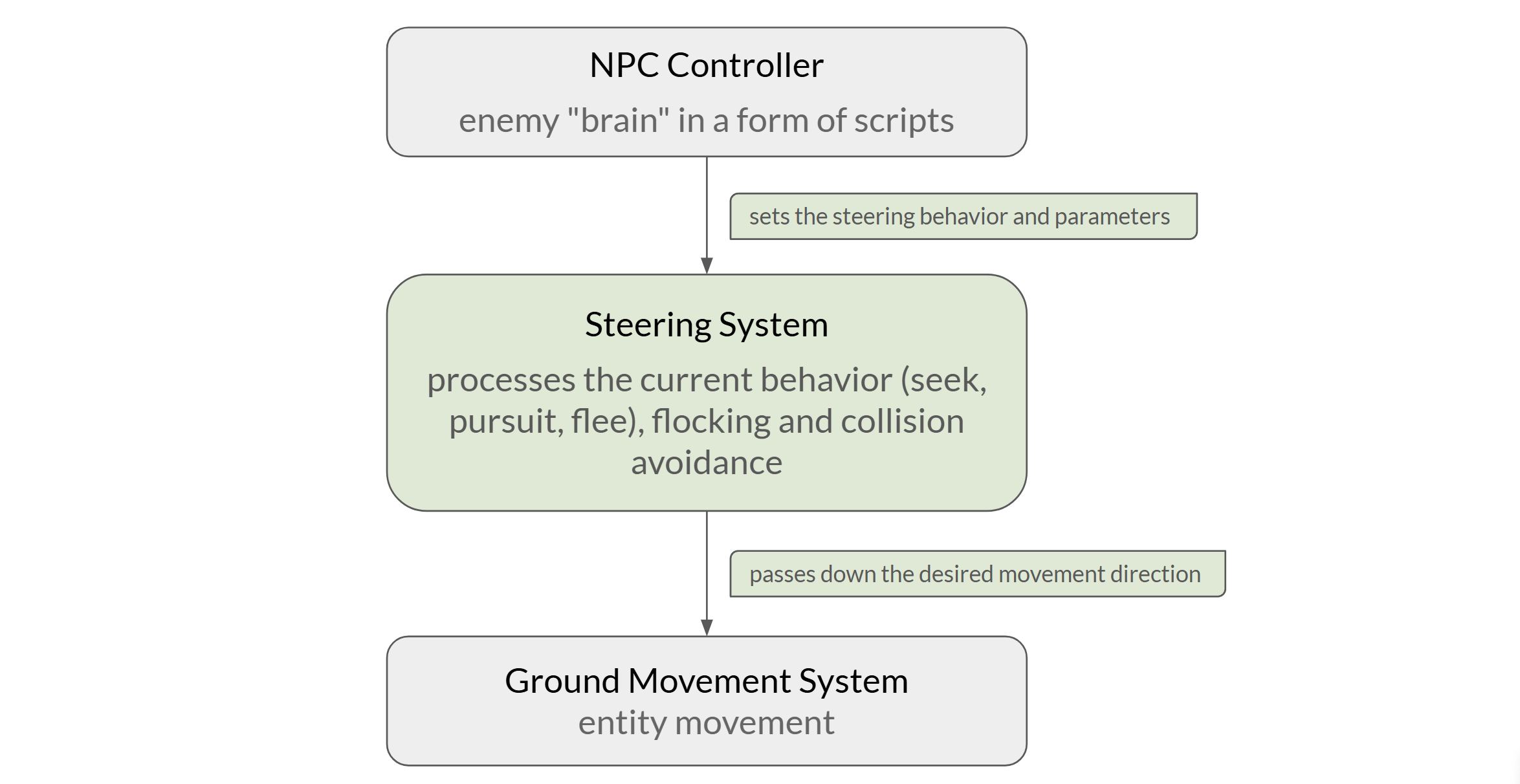

System hierarchy. The NPC controller was built by Tobias Opfermann, ground movement system by Thomas Iwanetzki.

In the video examples, the NPC controller sets the desired behavior - such as pursuing a dynamic target (the player), fleeing from the target (after attacking), or seeking the spawn position (when the player moves out of range).

The system uses two direction maps: an interest map and a danger map. Each map is an array of weights, with each entry mapped to a direction in world space. Map entries are generated through “contributions” from various steering forces, which are visualized as yellow debug text in the videos. Contributions from attractive forces are written into the interest map, while repelling forces populate the danger map. Finally, both maps are normalized and superimposed, and the result is resolved into a single direction. To reduce jitter, a running mean is applied to smooth out directional changes.
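The resolve step described above can be sketched as follows. This is an illustrative C++ version under stated assumptions, not the project's implementation: eight direction slots, contributions with a cosine falloff that keep the maximum of the existing and new weight, normalization by each map's maximum, superposition as interest minus danger clamped at zero, and an exponential moving average standing in for the running mean.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

constexpr int kSlots = 8;  // assumed map resolution
constexpr float kPi = 3.14159265f;
using ContextMap = std::array<float, kSlots>;

// World-space angle (radians) represented by a map slot.
float slotAngle(int slot) { return 2.0f * kPi * static_cast<float>(slot) / kSlots; }

// Writes a contribution that peaks at 'angle' and falls off with the cosine;
// keeping the maximum lets several behaviors share one map.
void addContribution(ContextMap& map, float angle, float weight) {
    for (int i = 0; i < kSlots; ++i) {
        const float falloff = std::max(0.0f, std::cos(slotAngle(i) - angle));
        map[i] = std::max(map[i], weight * falloff);
    }
}

void normalize(ContextMap& map) {
    const float maxWeight = *std::max_element(map.begin(), map.end());
    if (maxWeight > 0.0f) {
        for (float& w : map) w /= maxWeight;
    }
}

// Superimposes interest and danger (interest minus danger, clamped at zero) and
// resolves the remaining weights into one unit direction. Returns false if every
// direction is masked out.
bool resolveDirection(ContextMap interest, ContextMap danger, float& outX, float& outZ) {
    normalize(interest);
    normalize(danger);
    outX = 0.0f;
    outZ = 0.0f;
    for (int i = 0; i < kSlots; ++i) {
        const float w = std::max(0.0f, interest[i] - danger[i]);
        outX += w * std::cos(slotAngle(i));
        outZ += w * std::sin(slotAngle(i));
    }
    const float length = std::sqrt(outX * outX + outZ * outZ);
    if (length <= 1e-6f) return false;
    outX /= length;
    outZ /= length;
    return true;
}

// Exponential moving average used as a cheap running mean against jitter.
void smoothDirection(float& smoothedX, float& smoothedZ, float newX, float newZ, float alpha) {
    assert(alpha > 0.0f && alpha <= 1.0f);
    smoothedX += alpha * (newX - smoothedX);
    smoothedZ += alpha * (newZ - smoothedZ);
}
```

For example, an interest contribution toward +X combined with a danger contribution 45 degrees to one side resolves to a direction that still heads forward but bends away from the danger.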

Attracting forces include pursuit (with or without offset), seek, flee, and flocking behaviors. Repelling forces consist of static collision avoidance and dynamic collision avoidance (e.g., avoiding the moving player and other NPCs). In the videos below, entities and their resulting desired directions are visualized with circles and debug lines: red for the player (target) and blue for the NPCs. Each enemy reserves one attack slot around the player (the attack scheduler that provides these slots was developed by Tobias Opfermann), and this slot becomes the offset for the pursuit target (pursuit with offset behavior). These target positions are marked by magenta crosses. Weighted danger directions are displayed as red lines around the enemies, while the valid interest directions remaining after subtraction are shown in green.

A small group of enemies switching between pursuit, flee, and seek behaviors.

A larger group of enemies navigating around static collision geometry.
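Pursuit with offset can be sketched in a few lines. In this hypothetical version, the seek target is the player's predicted position plus the reserved attack-slot offset. The prediction horizon (distance divided by the NPC's maximum speed, capped by `maxPredictionTime`) is a common heuristic for Reynolds-style pursuit and an assumption here, as are all names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec2 { float x, z; };

// Hypothetical "pursuit with offset": seek the target's predicted future
// position shifted by the attack-slot offset reserved for this NPC.
Vec2 pursuitWithOffsetTarget(Vec2 selfPos, float selfMaxSpeed,
                             Vec2 targetPos, Vec2 targetVelocity,
                             Vec2 slotOffset, float maxPredictionTime) {
    assert(selfMaxSpeed > 0.0f);
    const float dx = targetPos.x - selfPos.x;
    const float dz = targetPos.z - selfPos.z;
    const float distance = std::sqrt(dx * dx + dz * dz);
    // Look further ahead the further away the target is, up to a cap.
    const float t = std::min(distance / selfMaxSpeed, maxPredictionTime);
    return Vec2{targetPos.x + targetVelocity.x * t + slotOffset.x,
                targetPos.z + targetVelocity.z * t + slotOffset.z};
}
```

The returned point would then feed a seek contribution into the interest map.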

Interactable System

This system processes interactions between (usually) pairs of entities - an interactor and an interactable - through a state machine. The state machine can be conceptually divided into the following stages:

- Positioning both entities at predefined positions (if needed)

- Playing synced animations (if available)

- Handling interaction outcomes
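These stages map naturally onto a small state machine. The hypothetical sketch below (names and the per-transition skip flags are illustrative) advances to the next stage once the current one reports completion, skipping positioning or animation when a transition does not need them.

```cpp
#include <cassert>

enum class InteractionState { Idle, Positioning, PlayingAnimation, HandlingOutcome, Finished };

// Illustrative per-transition flags deciding which stages apply.
struct StageFlags {
    bool needsPositioning;
    bool hasSyncedAnimation;
};

// Returns the state that follows 'current' once the current stage has completed.
InteractionState nextState(InteractionState current, const StageFlags& flags) {
    switch (current) {
        case InteractionState::Idle:
            if (flags.needsPositioning) return InteractionState::Positioning;
            [[fallthrough]];
        case InteractionState::Positioning:
            if (flags.hasSyncedAnimation) return InteractionState::PlayingAnimation;
            [[fallthrough]];
        case InteractionState::PlayingAnimation:
            return InteractionState::HandlingOutcome;
        case InteractionState::HandlingOutcome:
        case InteractionState::Finished:
            return InteractionState::Finished;
    }
    return InteractionState::Finished;  // unreachable; silences missing-return warnings
}
```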

Each interactable entity is assigned an Interactable component. These components support an arbitrary number of defined transitions stored in setup data. A “transition” is the concrete interaction that typically switches the “multi-state” of an interactable from A to B. Each transition defines its:

- Requirements: conditions for triggering the transition (required “multi-state”, angle range, interactor type, etc.)

- Body: instructions for handling the transition (e.g. option to skip certain state-machine steps, what animations to play, etc.)

- Outcome: the resulting “multi-state” or a special action (such as entering a quick-time event).

This structure allowed the system to automatically choose the currently available interaction based on context. For example, interacting with an object from behind may trigger different animations than interacting from the front.

An example of how the different closed-to-open transitions are chosen based on the interacting character and the interaction direction. The direction ranges defined for this particular chest type are visualized with red lines. The blue cross marks the interaction position from which the animation is triggered; it can also be defined per transition. In this example, the characters position themselves slightly further from the chest when interacting near its corner. Some models (such as the male character’s instrument) are not rendered due to the NDA.
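A hypothetical data layout for the requirements/body/outcome split, plus a context-based selection, might look like this. Field names, the degree-based angle range, and the first-match selection order are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

enum class InteractorType { Any, CharacterA, CharacterB };
enum class OutcomeAction { SetState, EnterQuickTimeEvent };

struct TransitionRequirements {
    int requiredState;               // "multi-state" the interactable must be in
    float minAngleDeg, maxAngleDeg;  // approach angle relative to the object's facing, in [-180, 180]
    InteractorType interactorType;
};

struct TransitionBody {
    std::string animation;  // synced animation to play
    bool skipPositioning;
};

struct TransitionOutcome {
    OutcomeAction action;
    int resultingState;  // only meaningful for OutcomeAction::SetState
};

struct Transition {
    TransitionRequirements requirements;
    TransitionBody body;
    TransitionOutcome outcome;
};

bool requirementsMet(const TransitionRequirements& r, int currentState,
                     float approachAngleDeg, InteractorType interactor) {
    return r.requiredState == currentState
        && approachAngleDeg >= r.minAngleDeg && approachAngleDeg <= r.maxAngleDeg
        && (r.interactorType == InteractorType::Any || r.interactorType == interactor);
}

// Returns the index of the first transition whose requirements match the context.
std::optional<std::size_t> chooseTransition(const std::vector<Transition>& transitions,
                                            int currentState, float approachAngleDeg,
                                            InteractorType interactor) {
    for (std::size_t i = 0; i < transitions.size(); ++i) {
        if (requirementsMet(transitions[i].requirements, currentState, approachAngleDeg, interactor)) {
            return i;
        }
    }
    return std::nullopt;
}
```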

The system is also responsible for filtering nearby interactables and their transitions. Only one interaction and transition can be available at a time, with the exception of auto-triggered ones (e.g., picking up an item while stepping on a pressure-platform switch is still possible). Because the filtering hierarchy was clearly structured, it was easy to cache the “potential interactions” (those that lost only because of an unmet requirement) for later use in contextual player hints (such as “switch character to interact”).

Code that searches for an available transition within a single interactable object.

```cpp
const bool isRadiusExpanded = isThisLastAvailableInteractionInRange && pInteractorComponent->pSetupData->interactionSensorPointData.canExpand;
const float expandedRadius = pInteractibleComponent->interactorSensorSphereData.sensorSphereRadius * (isRadiusExpanded ? pInteractibleComponent->interactorSensorSphereData.inRangeSphereExpandFactor : 1.0f);
const bool isExclusiveIfExists = pInteractorComponent->exclusiveInteraction == tix::ecs::InvalidEntityId || pInteractibleComponent->interactibleEntityId == pInteractorComponent->exclusiveInteraction;

if (isPointInsideSphere(&isInRange, &currentSquareDistance, pInteractibleComponent->interactorSensorSphereData.worldSpacePosition, expandedRadius, interactorPoint)
    && isExclusiveIfExists
    && MultistableUtility::isUnlocked(pMultistableComponent)
    && !pInteractorComponent->isInteractionLocked
    && !InteractibleUtility::isInteractedWith(*pInteractibleComponent))
{
    bool foundValidTransition = false;
    size_t validTransitionIdx = 0u;

    // The last matching transition in the library wins.
    for (size_t iTransition = 0u; iTransition < transitionLibrary.transitions.getSize(); iTransition++)
    {
        if (areTransitionRequirementsMet(pInteractorMovementState, *pInteractibleComponent, pMultistableComponent, *pQuickTimeEventStorage, transitionLibrary.transitions[iTransition], interactorEntityId)
            && (isInteractorStatic
                || (isMovementStateCorrect(*pInteractorMovementState, horsemanStorage, interactorEntityId)
                    && isInteractibleWithinViewAndReachable(&currentAngleDiff, pData->interactibleFacingDirectionCache[interactibleIdx], transitionLibrary.transitions[iTransition], pFacingStorage->getComponentReadOnly(interactorEntityId), interactorPositionComponent, interactiblePositionComponent, pInteractibleComponent->interactorViewAngleRange, pInteractibleComponent->maxHeightDifference)))
            && isInteractorTypeRequirementMet(&potentialInteraction, *pInteractorComponent, playerData, transitionLibrary.transitions[iTransition], interactorEntityId))
        {
            foundValidTransition = true;
            validTransitionIdx = iTransition;

            // Auto-triggered transitions are additionally collected in a separate list.
            if (transitionLibrary.transitions[iTransition].autoTriggerOption != AutoTrigger_Never && !autoTriggerInteractions.isFull())
            {
                InteractibleUtility::AvailableInteraction autoTriggerInteraction(pInteractibleComponent->interactibleEntityId, iTransition, transitionLibrary.transitions[iTransition].autoTriggerOption, pInteractibleComponent);
                autoTriggerInteractions.pushBack(autoTriggerInteraction);
            }
        }
    }

    const bool hasHigherPrio = hasSmallerAngleOrHigherPrio(currentAngleDiff, smallestAngleDiff, static_cast<int>(pInteractibleComponent->interactionPriority), highestPriority);

    if (foundValidTransition && (isInteractorStatic || hasHigherPrio))
    {
        overwriteAvailableInteraction(pInteractorComponent, &highestPriority, &smallestAngleDiff, currentAngleDiff, *pInteractibleComponent, validTransitionIdx, transitionLibrary.transitions[validTransitionIdx]);
    }
    else if (potentialInteraction.type != PotentialInteractionType::PotentialInteractionType_Count && !isInteractorStatic && !foundValidTransition && hasHigherPrio) // it would have won if not for the requirements check;
    {
        overwritePotentialInteraction(pInteractorComponent, potentialInteraction);
    }
}
```

As a result, the system was versatile enough to support a very diverse set of interactions without implementing each one as a separate feature. Examples include pulling and pushing levers, triggering pressure-platform switches by walking over them, objects that trigger other objects, initiating dialogues, executing enemies, and so on.

Carriable System

This system manages entering and exiting the carrying state and handles specific edge cases. When the player issues a place command, the system performs a number of radial ground checks around the player to automatically turn them and place the object on collision-free ground. Picking up objects and socketing them in or out are treated as interactions and are handled by the Interactable System.

Sphere VFX by Adrian Vögtle.
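The radial placement search could be sketched as below. This is an assumption-laden illustration: candidate spots lie on a circle around the player and are tested in order of increasing turn away from the current facing, and `isFreeGround` is a hypothetical callback standing in for the engine's ground and collision queries.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <optional>

struct PlacementResult {
    float facingAngle;  // yaw the player turns to before placing
    float x, z;         // placement point on the ground plane
};

// Hypothetical radial search for collision-free ground around the player.
std::optional<PlacementResult> findPlacement(
        float playerX, float playerZ, float facingAngle, float radius, int sampleCount,
        const std::function<bool(float, float)>& isFreeGround) {
    assert(sampleCount > 0);
    const float step = 2.0f * 3.14159265f / static_cast<float>(sampleCount);
    for (int i = 0; i < sampleCount; ++i) {
        // Visit offsets 0, +1, -1, +2, -2, ... steps away from the current facing.
        const int k = (i + 1) / 2;
        const float offset = (i % 2 == 1 ? 1.0f : -1.0f) * static_cast<float>(k) * step;
        const float angle = facingAngle + offset;
        const float x = playerX + radius * std::cos(angle);
        const float z = playerZ + radius * std::sin(angle);
        if (isFreeGround(x, z)) return PlacementResult{angle, x, z};
    }
    return std::nullopt;
}
```

Testing the current facing first means the player only turns when the spot in front of them is blocked.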

The carried object is parented to the player via the Attachment System, to which I contributed an attachment type that preserves the child’s original orientation. I also added an interpolation feature, with configurable parameters, that smoothly aligns the child’s orientation, facing, and position with those of the parent.
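The smoothing can be illustrated with frame-rate-independent exponential damping. In this hypothetical sketch, `halfLife` (seconds to close half of the remaining gap) plays the role of a configurable parameter, and yaw is interpolated along the shortest arc; the actual parameters of the Attachment System are not shown in the post.

```cpp
#include <cassert>
#include <cmath>

constexpr float kPi = 3.14159265f;

// Fraction of the remaining gap to close this frame; halfLife <= 0 snaps instantly.
float dampFactor(float halfLife, float dt) {
    return halfLife <= 0.0f ? 1.0f : 1.0f - std::exp2(-dt / halfLife);
}

// Wraps an angle difference into [-pi, pi) so yaw turns the short way round.
float shortestAngleDelta(float from, float to) {
    float delta = std::fmod(to - from + kPi, 2.0f * kPi);
    if (delta < 0.0f) delta += 2.0f * kPi;
    return delta - kPi;
}

struct AttachedTransform { float x, y, z, yaw; };

// Moves the child a damped step toward the parent-derived target transform.
void updateSmoothedAttachment(AttachedTransform& child, const AttachedTransform& target,
                              float positionHalfLife, float yawHalfLife, float dt) {
    assert(dt >= 0.0f);
    const float p = dampFactor(positionHalfLife, dt);
    child.x += (target.x - child.x) * p;
    child.y += (target.y - child.y) * p;
    child.z += (target.z - child.z) * p;
    child.yaw += shortestAngleDelta(child.yaw, target.yaw) * dampFactor(yawHalfLife, dt);
}
```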

Quick Time Event System

This system registers button presses and handles both win and fail conditions. The basic QTE type increases a linear progress value with each player input and decreases it while the player is inactive. A blended progress value interpolates toward the linear one using lerp-damping. A QTE fails if no input is received within a specified time window. The system supports triggering visual and sound effects at defined progress or regression points; these effects are configured per QTE and triggered automatically when their conditions are met. The example shown in the video is an interaction that ends in a special QTE action. The QTE outcome then serves as the requirement for the follow-up transitions: “pull the lever to the end” (QTE passed) or “let go” (QTE failed).

The yellow bar represents the linear progress, while the green bar shows the blended (smoothed) progress. A visual effect is triggered at the 20% progress threshold. Lever model by Clemens Petri, animations by Phi Kernbach.
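A minimal sketch of the basic QTE type, under assumptions: progress is normalized to [0, 1], reaching 1.0 counts as a pass, effect thresholds are checked against the linear value, and only upward (progress) crossings are reported. All names and parameters are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical per-QTE configuration.
struct QteConfig {
    float progressPerPress;               // added to linear progress for each button press
    float decayPerSecond;                 // removed from linear progress while the player is inactive
    float blendRate;                      // lerp-damping rate (per second) for the blended value
    float inputTimeout;                   // seconds without input before the QTE fails
    std::vector<float> effectThresholds;  // progress points that trigger VFX/SFX
};

enum class QteStatus { Running, Passed, Failed };

struct QteState {
    float linear = 0.0f;
    float blended = 0.0f;
    float timeSinceInput = 0.0f;
    QteStatus status = QteStatus::Running;
    std::vector<float> triggeredEffects;  // thresholds crossed during the last update
};

void updateQte(QteState& s, const QteConfig& c, bool pressed, float dt) {
    s.triggeredEffects.clear();
    if (s.status != QteStatus::Running) return;
    const float previous = s.linear;
    if (pressed) {
        s.linear += c.progressPerPress;
        s.timeSinceInput = 0.0f;
    } else {
        s.linear -= c.decayPerSecond * dt;
        s.timeSinceInput += dt;
    }
    s.linear = std::clamp(s.linear, 0.0f, 1.0f);
    // Lerp-damping: move the blended value a fraction of the way toward the linear one.
    s.blended += (s.linear - s.blended) * std::min(1.0f, c.blendRate * dt);
    for (float threshold : c.effectThresholds) {
        if (previous < threshold && s.linear >= threshold) s.triggeredEffects.push_back(threshold);
    }
    if (s.linear >= 1.0f) s.status = QteStatus::Passed;
    else if (s.timeSinceInput >= c.inputTimeout) s.status = QteStatus::Failed;
}
```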

Boss Battle

During development, I took ownership of several enemy units. Adding a new enemy to the runtime involved creating and setting up its entity template, requesting any missing animations, and implementing custom logic within the enemy behavior scripts. The behavior scripting environment was provided by Tobias Opfermann. I’ve chosen to highlight the boss unit due to its unique design and the high level of interdisciplinary complexity it required. In addition to the boss, the video example contains a separate feature I was responsible for: ground surface types that apply buff/debuff status effects when walked on.

Apart from the two health stages and their attacks, the design incorporated the surroundings of the boss arena. Part of the boss battle logic that I implemented resided in the level scripts; the level scripting environment was provided by Markus Wall. The battle required temporary spawns of damaging areas, which I implemented as a standalone, reusable feature. The VFX were created by Adrian Vögtle and Alexandra Anokhina, and the boss animations were outsourced to Metricminds. The player combat system was created by Pascal Scheuber and the whip traversal by Manuela Schildknecht.

The battle includes a special arena-wide attack in which the boss becomes invincible and “summons” trees that must be destroyed before the attack ends. If the player manages to do so, the boss becomes stunned; otherwise, the player takes damage from the explosion. The video shows both outcomes (failure and success), as well as a special ground surface (lava-like terrain around the arena) that applies a status effect to the player.
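The win/fail resolution of the summon attack reduces to a race against a timer, sketched hypothetically below. The tree count, duration, and names are illustrative, and clearing the invincibility flag on both outcomes is an assumption.

```cpp
#include <cassert>

enum class SummonOutcome { InProgress, BossStunned, PlayerDamaged };

// Hypothetical state of the arena-wide summon attack.
struct TreeSummonAttack {
    int treesRemaining;
    float timeRemaining;          // seconds until the explosion
    bool bossInvincible = true;   // the boss cannot be damaged while the attack runs

    void onTreeDestroyed() {
        assert(treesRemaining > 0);
        --treesRemaining;
    }

    // Ticks the attack; on either outcome the boss is assumed to become vulnerable again.
    SummonOutcome update(float dt) {
        if (treesRemaining == 0) {
            bossInvincible = false;
            return SummonOutcome::BossStunned;
        }
        timeRemaining -= dt;
        if (timeRemaining <= 0.0f) {
            bossInvincible = false;
            return SummonOutcome::PlayerDamaged;
        }
        return SummonOutcome::InProgress;
    }
};
```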